Getting a Handle on AI Hallucinations


Image credit: Carloscastilla via Alamy Stock Photo. Article by John Edwards. InformationWeek – November 11, 2024.

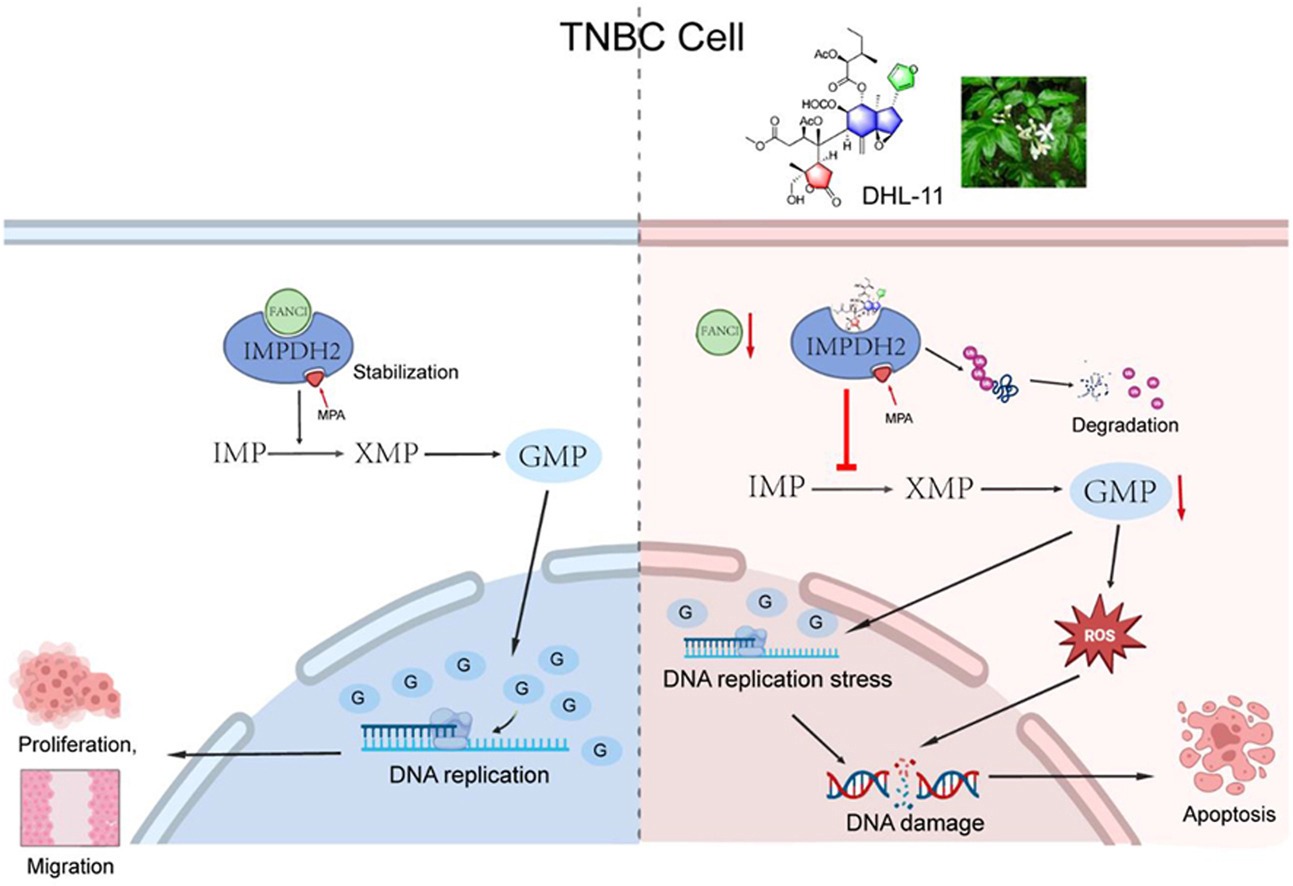

AI hallucination occurs when a large language model (LLM) — frequently a generative AI chatbot or computer vision tool — perceives patterns or objects that are nonexistent or imperceptible to human observers, generating outputs that are either inaccurate or nonsensical. AI hallucinations can pose a significant challenge, […]

Original article: www.informationweek.com
